Designing a New Rust Class at Stanford: Safety in Systems Programming

Writing quality software is hard. Sometimes, software breaks in entertaining ways. However, when software runs everything from personal assistants like Alexa and Google Home to banking to elections, some bugs can be much more severe.

This past quarter, Armin Namavari and I tried teaching a class about how to write software that sucks just a little less. We focused on common problems in computer systems caused by certain kinds of silly (but very serious) mistakes, such as issues of memory safety and thread safety. The class was built around three questions: What are common problems with systems programming right now? How are people responding to those issues? How do those measures fall short? We wanted students to be aware of problems that have plagued the industry for decades, and we wanted to teach students how to use tools and mental models that people have developed to combat those issues. However, these tools are imperfect, so we also wanted students to experience and understand their limitations and be better aware of what to watch out for when building systems.

In particular, we focused on teaching the Rust programming language as a way to build better habits and combat mistakes endemic to C- and C++-based software. In many ways, Rust requires good practices, and its compiler produces unusually helpful error messages that teach students as they go. Additionally, we looked at how lessons from Rust can be applied to write better code in C++, and we taught students about tools that can be used to detect common mistakes before they become a problem.

In contrast with a typical security class, we aimed to build a robust software engineering aspect into the course, giving code-heavy assignments and trying to improve students’ processes rather than merely giving awareness about common problems. Our goal with this class was to train students to be better software developers, regardless of what programming language they end up using.

I think the class went quite well, and student evaluations were extremely positive. Even before the quarter ended, students told us that the class was extremely helpful for implementing and debugging assignments in other classes. We hope to teach the class again this coming fall, and are looking for input on how it might be improved.

This blog post aims to be a summary of what we did, why we did it, and what we are thinking about changing for the future. It’s long, but written so you can skip around to whatever is interesting to you. Here’s an outline:

- What is safety, and why should we care?

- Imagining safety education

- Summary of the class

- Survey results

- Takeaways

- General teaching lessons learned

Major thanks go to Armin Namavari for being a wonderful co-instructor, Sergio Benitez for giving an excellent guest lecture, Will Crichton for providing feedback and guidance in designing the class, Jerry Cain for giving us the opportunity to teach and giving encouragement throughout, and Rakesh Chatrath, Jeff Tucker, Vinesh Kannan, John Deng, and Shiranka Miskin for reviewing drafts of this post.

What is safety, and why should we care?

Safety is an unfortunately vague term lacking a great definition, but for our purposes, we’ll say safety is about avoiding harmful mistakes. I view safety as being concerned with the subset of potentially serious bugs: if a button on a website renders as purple instead of blue, that’s a bug we might not care much about, but if bank account software allows users to withdraw the same $1000 multiple times, or if autonomous vehicle software can fail under certain circumstances, that’s a more concerning problem.

Safety is particularly relevant in systems programming because systems programming is hard. Systems programming often involves pushing the limits of what hardware can do, and often involves reasoning about the state of multiple threads, sometimes even distributed across thousands of machines. Additionally, for performance and historical reasons, the majority of systems software is written in C or C++, which are notoriously difficult to use correctly. Reasoning about pointers and memory is hard, and C and C++ do little to help. C/C++’s weak type systems and poorly defined specifications mean they will happily accept clearly broken code with no sensible interpretation.

Even worse, there are countless minefields where the languages’ poor designs are just begging for mistakes to happen. Simple functions like strcpy, which copies a string from one place in memory to another, are extremely easy to misuse and have been the cause of countless security vulnerabilities. The strncpy function was introduced to address the weaknesses of strcpy, yet strncpy turns out to be almost as bad. Even printf can lead to security vulnerabilities when called the wrong way.
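For contrast, here is a minimal Rust sketch of the operation strcpy makes so easy to get wrong: copying a caller-supplied string into a fixed-size buffer. The helper name copy_into_buffer and the 16-byte buffer size are hypothetical. The length check is explicit, and even if it were forgotten, slicing in safe Rust would panic on an out-of-range index rather than silently write past the end of the buffer.

```rust
/// Hypothetical helper: copies `src` into a fixed 16-byte buffer,
/// refusing inputs that do not fit instead of overflowing.
fn copy_into_buffer(src: &str) -> Result<[u8; 16], String> {
    let mut buf = [0u8; 16];
    let bytes = src.as_bytes();
    if bytes.len() > buf.len() {
        return Err(format!(
            "input is {} bytes; buffer holds {}",
            bytes.len(),
            buf.len()
        ));
    }
    // copy_from_slice requires equal lengths, so copy into a sub-slice
    // of exactly the input's size; the remaining bytes stay zero.
    buf[..bytes.len()].copy_from_slice(bytes);
    Ok(buf)
}

fn main() {
    for input in ["pair-device-01", "a configuration string that is far too long"] {
        match copy_into_buffer(input) {
            Ok(buf) => println!("copied {:?}", &buf[..input.len()]),
            Err(e) => println!("rejected: {}", e),
        }
    }
}
```

The oversized input is reported as an error the caller has to handle, rather than becoming a stack overflow an attacker can exploit.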

Also, as systems software provides the foundation on which other software runs, it’s particularly important to get right. Many real-world examples demonstrate the severe impact of the aforementioned issues. One of my favorite examples is presented in Comprehensive Experimental Analyses of Automotive Attack Surfaces. It’s a great read, but as a summary, the authors bought a popular car and attempted to find as many ways as possible to remotely hijack the car without having physical access. They examined vectors such as wireless key fobs, Bluetooth, and even the tire pressure monitoring system (which uses wireless signals to transmit information from sensors in the tires). Every vector was found to be exploitable, many of them trivially so. For example, the Bluetooth software had “over 20 calls to strcpy, none of which were clearly safe.” The authors only looked at the first instance of strcpy, and found that it copies data to the stack when handling a Bluetooth configuration command without checking the length of the string. This results in a trivially exploitable buffer overflow that allows a paired device to execute arbitrary code in the media system. Since the subsystems in most cars lack isolation, compromising one subsystem (such as the media player) can result in the compromise of the entire car. In 2015, researchers demonstrated this, remotely killing a Jeep that was driving on the highway.

This isn’t just a problem with the automotive industry. Professional programmers across many industries regularly make simple but serious mistakes.

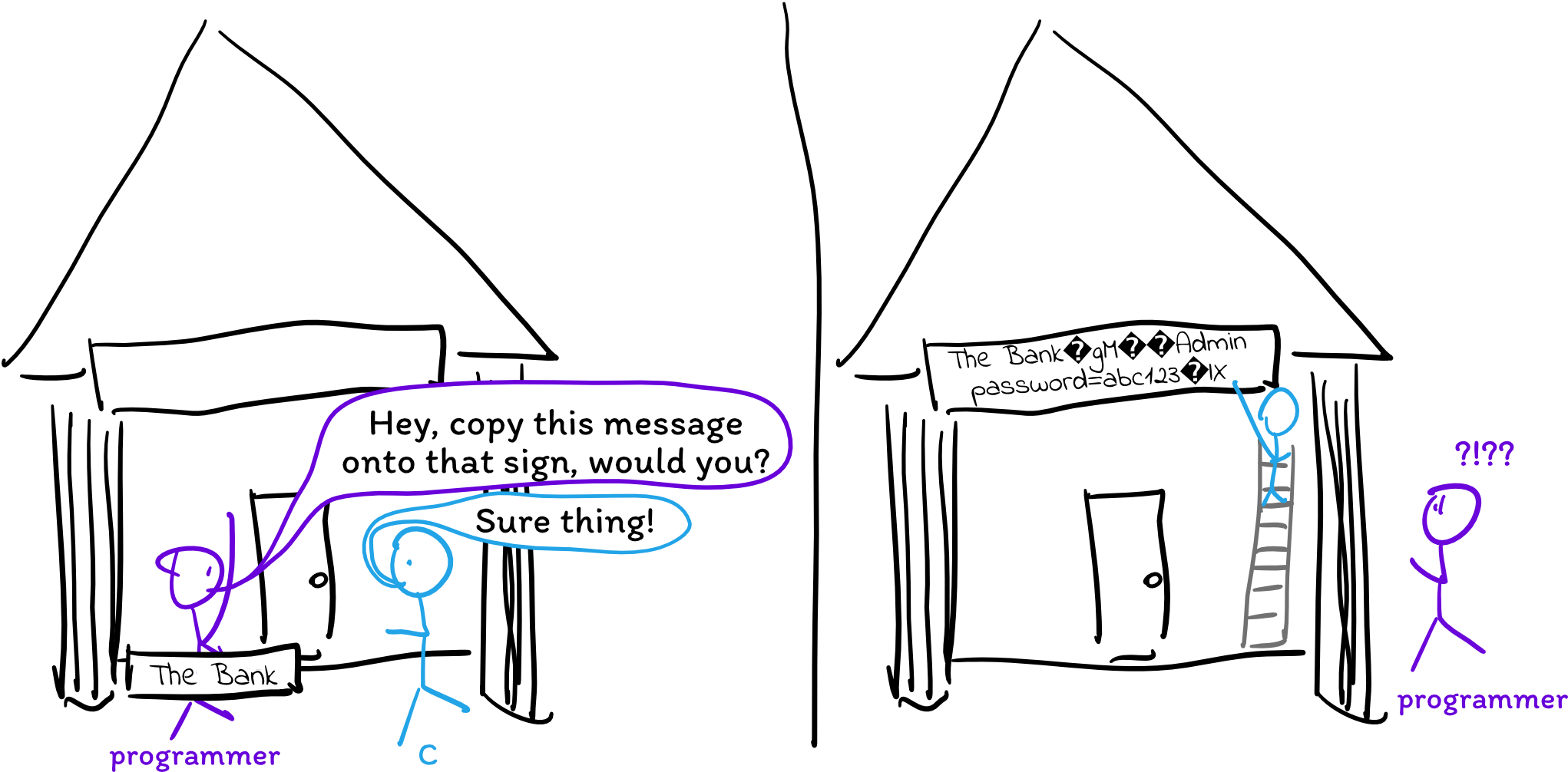

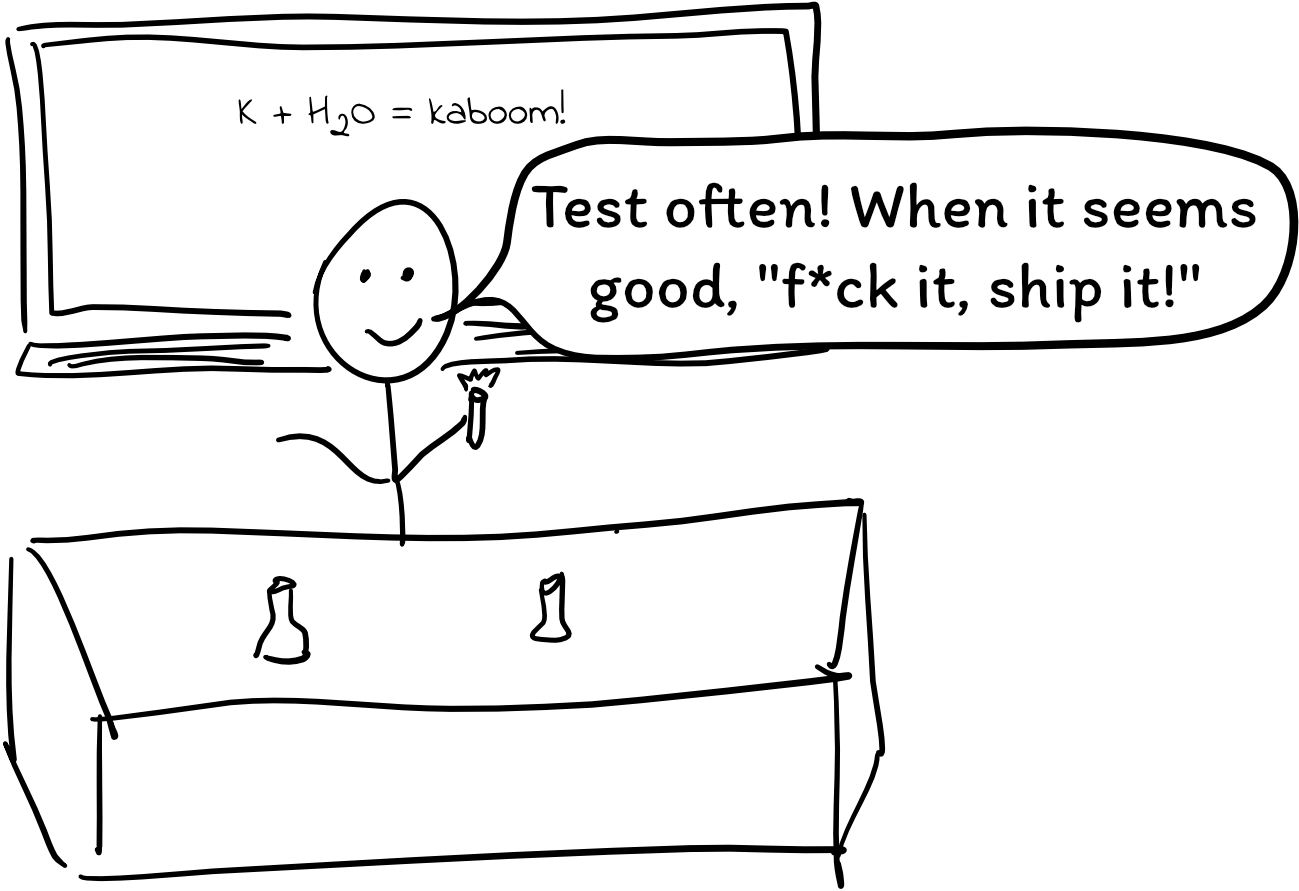

This seems like something we should be talking about. Would you hand a chemistry student a bunch of volatile chemicals that regularly explode in professional labs without a robust discussion of safety? Probably not. Yet that’s effectively what our curriculums are doing. We’re handing students a series of tools that professionals routinely shoot themselves in the foot with, and we aren’t having a substantial discussion of precautions we can take to avoid potentially life-threatening mistakes.

One might argue that the perils of strcpy are nothing like the dangers of a fully-stocked chemistry lab; there’s no danger of students dying in front of the computer here. (Well, we hope.) However, I argue that we deal with dangers on a much larger scale. One line of code can easily affect millions (or billions) of people, and the impacts of our code can be much greater than we realize, even when we aren’t working on software for cars (which we’ve killed people with) or medical devices (which we’ve also killed people with). It may seem that the worst-case bugs in a file sharing server would simply prevent users from sharing files, but one such bug led to the significant disruption of the National Health Service in the UK. Non-critical emergencies had to be refused. It may seem that the worst-case bugs in a web application library would simply take down some websites, but one such bug led to the exfiltration of extremely sensitive data on nearly every American adult with a credit history.

Precautions and safety measures do exist, but people aren’t using them. Part of this may be because the tooling isn’t good enough or easy enough to use. Part of this may be because there hasn’t been enough time to see mass adoption. But I think part of this may also be because of a lack of education and awareness surrounding these issues. We can teach C and C++ and hope that students will learn good habits and learn how to use static analyzers, sanitizers, fuzzers, and safer languages on the job, but then have we not failed them as educators? Seeing that software engineers keep making pretty basic mistakes with critical impact, it seems that something is wrong and we should be trying to do more.

Imagining safety education

So, we should talk more about safety. But how should we go about it?

Should there be a “safety class”?

In planning this class, we couldn’t find any other programming safety class out there. Is that because no one has thought to do it yet, or is it because it is better to teach safety in context alongside more central material?

Particularly because “safety” is so broad, it does seem helpful to cover best practices and helpful tricks/tools while introducing new material. However, there were two reasons we felt it might make sense to teach a class entirely focused on safety.

First, teaching a separate safety class gives us room to experiment with teaching new material that would be difficult to integrate into existing classes. In particular, we were interested in teaching the Rust programming language as a medium to explore the ideas of lifetimes and ownership. Considering the nightmare potential of C and C++, it might be a good idea to teach Rust in the core curriculum in the future, but this is far too big a change to make given that Rust is such a new language and we have little experience teaching it.

Second, although writing secure, correct code often involves avoiding countless unrelated pitfalls, there do exist some common themes in safety. For example, Rust’s ownership model was designed to ensure memory safety, but Rust’s designers found that it also helped promote thread safety.

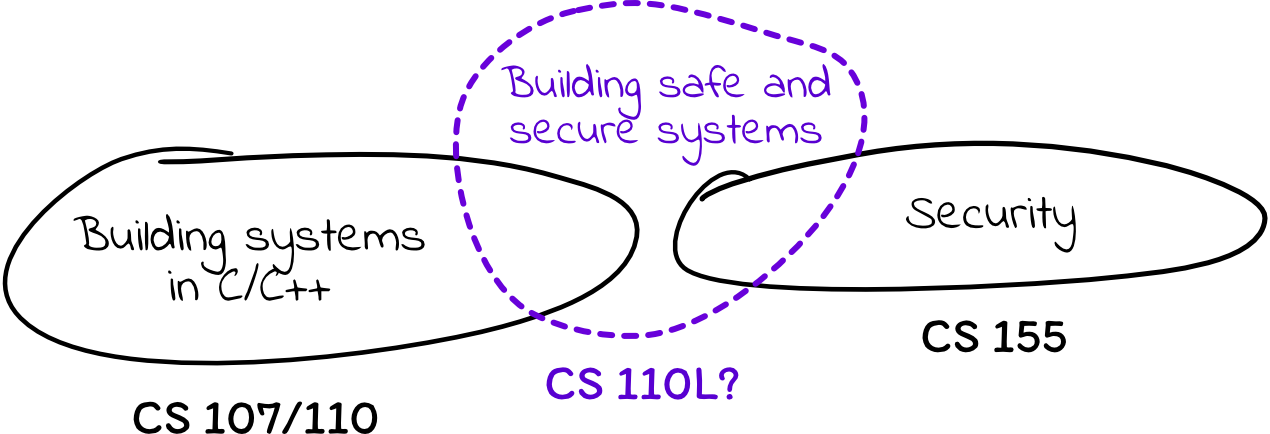

How would this be different from a security class?

As safety is closely related to security, a safety class would have significant overlap with security classes that already exist. Stanford, in particular, has CS 155, which is an excellent security class accessible to undergraduates that already does a great job of covering topics such as memory security and networked attacks. Why should we teach something separate?

Whereas security classes typically focus on teaching offensive techniques and defensive technologies in the context of frequently-broken C/C++ software, we felt it might be beneficial to teach from a perspective that is focused on building systems correctly in the first place. CS 155 often walks students through the game of cat and mouse that has played out over the last thirty years: how do attackers hack into things, and how can we close the loopholes they use? By contrast, we were interested in exploring whether there is a way we can change the ways students approach systems programming so that they make fewer mistakes and create fewer vulnerabilities in the first place. In the first CS 155 assignment, students see for themselves how a buffer overflow or double free can lead to remote code execution, but they may not necessarily develop an understanding of how to write code that is free of buffer overflows and double frees, and they certainly don’t get any practice with it. Can we instill better habits, a stronger sense of danger, and better use of tools so that students write better code off the bat?

How does one teach safe programming?

Teaching safe programming is difficult when there are so many different pitfalls to watch out for. How do we design a class that is more than a checklist of mistakes to avoid?

In our class, we wanted to explore safety using the Rust programming language, which is a relatively new systems programming language that has been gaining traction. There are a few specific reasons we wanted to do so:

- Rust requires many best practices by default and prevents common mistakes allowed by C and C++. Often, there is only one way to do things, and that way is the right way. C++ can do many of the same things that Rust can, but even its safety features are littered with unsafe behavior that is distracting and confusing to teach (e.g. Rust’s Option and C++’s std::optional do the same thing conceptually, but the latter has three ways to extract an inner value, two of which are unsafe).

- Rust’s compiler errors are much more educational than those of other languages. The core Rust team has spent a lot of time making compiler messages informative and easy to read, even offering helpful suggestions for fixing things when there is an error. In some cases, programming in Rust is a bit like having a conversation with the compiler, where the compiler teaches you about why what you’re doing is unsafe.

- Lessons learned from programming in Rust are applicable to programming in C and C++. In many ways, Rust is a response to decades of common mistakes in C/C++, and in teaching why the language is designed the way it is, we can discuss many cases of subtly broken C/C++ code and examine how we can do better. Throughout the quarter, a few students in our class mentioned that while working on assignments for CS 110, they felt like they had a little Rust compiler in their heads highlighting ownership errors in their C++ code.

- Rust is an up-and-coming language with good momentum and real potential to replace C and C++ code. I see it being valuable for students’ futures.
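To make the Option comparison concrete, here is a small Rust sketch of the common ways to get at an Option’s inner value; the find_port lookup and its port table are hypothetical. Every one of these is memory-safe: match and unwrap_or force you to handle the empty case, and expect panics with a message instead of reading an uninitialized value, which is what dereferencing an empty std::optional with * does in C++.

```rust
/// Hypothetical lookup: returns the port configured for a service, if any.
fn find_port(service: &str) -> Option<u16> {
    match service {
        "http" => Some(80),
        "https" => Some(443),
        _ => None,
    }
}

fn describe(service: &str) -> String {
    // `match` must be exhaustive: leaving out the None arm is a compile error.
    match find_port(service) {
        Some(port) => format!("{} uses port {}", service, port),
        None => format!("{} has no known port", service),
    }
}

fn main() {
    println!("{}", describe("https"));
    println!("{}", describe("gopher"));

    // `if let` runs the body only in the Some case.
    if let Some(port) = find_port("http") {
        println!("http -> {}", port);
    }

    // `unwrap_or` supplies a fallback value instead of failing.
    let port = find_port("gopher").unwrap_or(8080);
    println!("gopher falls back to {}", port);

    // `expect` panics with this message on None; it never reads garbage.
    let https = find_port("https").expect("https should always be configured");
    println!("https -> {}", https);
}
```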

In addition to teaching programming in Rust, we also wanted to make sure to talk about how to apply Rust lessons to C++ and how to use tools to improve the quality of C++ code. C++ has added many useful safety features over the last 20 years to help prevent common problems. Many static and dynamic analysis tools, such as linters, sanitizers, and fuzzers, have also been developed to help identify bugs in programs, and we wanted to show students how to incorporate these tools into a workflow to catch mistakes early in development.

I won’t claim that we figured out the best way to teach safety, but as I’ll discuss later, this approach ended up working decently well. I would love to hear any other thoughts about ways we might approach this!

Summary of the class

We registered CS 110L as an optional two-unit supplemental course to CS 110, which is our second core systems class and covers filesystems, multiprocessing, multithreading, and networking. (The average undergraduate course load is 15 units. A two-unit class is expected to require six hours of work per week.)

While it may have been better to make this a standalone class, we registered it as a supplemental class for two reasons:

- It allowed us to more easily experiment with bringing discussions of safety and security closer to the core curriculum, as discussed previously.

- One-to-two unit supplemental classes are more easily approved and allow for more improvisation during the quarter.

This placed some extra constraints on our class, as we needed to ensure the material was relevant to students currently working through CS 110. Balancing conflicting interests was difficult in the beginning, as there was plenty of supplemental CS 110 material that would have been really interesting to talk about but not related to safety, and it was also difficult to schedule the class so that students would have covered all prerequisite material in CS 110 before we talked about it.

Lectures

Lectures were 50 minutes long, taught twice a week over Zoom. Covering our material in fifty minutes was quite challenging, and in the future, we might consider making this a three unit class so that lectures don’t feel so rushed. Also, teaching over Zoom was very difficult at first; I felt like I was just talking at an inanimate camera, making it hard to feel engaged, and I had a hard time pacing and gauging students’ understanding as I couldn’t really see students’ faces. We got better at it as the quarter went on, however.

You can find all lecture materials online here. Here is a high-level overview of what we covered:

Memory safety

Rust relies on an ownership model to prevent memory errors such as memory leaks, double frees, use-after-frees, iterator invalidations, and more. Learning how to operate within Rust’s model can be challenging for newer programmers, but Rust simply enforces habits that good C/C++ programmers already use to write safer code.
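As a small illustration of what the ownership model enforces, the sketch below (function names are hypothetical) passes a Vec by value, which moves it: afterwards the caller can no longer touch it, so exactly one owner is responsible for freeing the buffer and a double free or use-after-free cannot happen. Borrowing with & lets a function read the data without taking ownership. The commented-out line would be rejected at compile time.

```rust
/// Takes ownership of `names`; the Vec is freed when this function returns.
fn consume(names: Vec<String>) -> usize {
    names.len()
}

/// Borrows `names` immutably; the caller keeps ownership.
fn count_long(names: &[String]) -> usize {
    names.iter().filter(|n| n.len() > 4).count()
}

fn main() {
    let names = vec![
        String::from("Ferris"),
        String::from("Tux"),
        String::from("Duke"),
    ];

    // Borrowing: `names` is still usable afterwards.
    let long = count_long(&names);
    println!("{} long names", long);

    // Moving: ownership transfers into `consume`, which frees the Vec.
    let total = consume(names);
    println!("{} names consumed", total);

    // println!("{:?}", names); // error[E0382]: borrow of moved value: `names`
}
```

This is the same discipline a careful C programmer follows by documenting which function frees a pointer; the difference is that rustc checks it.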

Alternative approaches to error handling

Students are already familiar with exceptions, so we talked about why exceptions sometimes fall short, and we discussed the pros and cons of handling errors via return values, as well as how Rust does this with Option and Result.
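Here is a minimal sketch of error handling via return values in Rust. The parse_listen_addr function, its ConfigError type, and the host:port input format are hypothetical. The ? operator returns early with the error, so every way the function can fail is visible in its signature rather than thrown invisibly, and the caller has to deal with the Result before it can use the value.

```rust
use std::num::ParseIntError;

/// Hypothetical error type for a tiny config parser.
#[derive(Debug, PartialEq)]
enum ConfigError {
    MissingPort,
    BadPort(ParseIntError),
}

/// Hypothetical parser for strings like "127.0.0.1:8080".
fn parse_listen_addr(s: &str) -> Result<(String, u16), ConfigError> {
    // `split_once` returns an Option; `ok_or` turns a missing ':' into an error.
    let (host, port) = s.split_once(':').ok_or(ConfigError::MissingPort)?;
    // `?` returns early if parsing fails; `map_err` wraps the library error.
    let port: u16 = port.parse().map_err(ConfigError::BadPort)?;
    Ok((host.to_string(), port))
}

fn main() {
    for input in ["127.0.0.1:8080", "localhost", "localhost:http"] {
        match parse_listen_addr(input) {
            Ok((host, port)) => println!("{} -> host {}, port {}", input, host, port),
            Err(e) => println!("{} -> error: {:?}", input, e),
        }
    }
}
```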

Multiprocessing safety

CS 110 spends a few weeks introducing fork, pipes, and signals. We spent a lecture and a half summarizing several common problems with using these primitives directly, and argued that you should use higher-level abstractions whenever possible. If students ever do need to use these primitives directly, we wanted them to be aware of what to watch out for.
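As one example of such a higher-level abstraction, Rust’s standard library provides std::process::Command, which takes care of spawning the child, setting up pipes, and waiting for it. The sketch below assumes a Unix-like system with cat on the PATH; the helper name run_through_cat is hypothetical. Because the pipe handles are owned values, the write end is closed simply by letting it go out of scope, which avoids the classic bug of a child hanging forever waiting for EOF on a pipe someone forgot to close.

```rust
use std::io::Write;
use std::process::{Command, Stdio};

/// Hypothetical helper: runs `cat`, feeds it `input`, and returns its stdout.
fn run_through_cat(input: &str) -> std::io::Result<String> {
    let mut child = Command::new("cat")
        .stdin(Stdio::piped())
        .stdout(Stdio::piped())
        .spawn()?;
    {
        // Take ownership of the write end of the stdin pipe. When `stdin`
        // is dropped at the end of this block, the pipe closes and cat sees EOF.
        // (For large inputs, write from a separate thread instead: if cat
        // fills its stdout pipe while we are still writing, both sides block.)
        let mut stdin = child.stdin.take().expect("stdin was configured as piped");
        stdin.write_all(input.as_bytes())?;
    }
    // Reads all of stdout and reaps the child, like waitpid.
    let output = child.wait_with_output()?;
    Ok(String::from_utf8_lossy(&output.stdout).into_owned())
}

fn main() -> std::io::Result<()> {
    let out = run_through_cat("hello from the parent\n")?;
    print!("child wrote: {}", out);
    Ok(())
}
```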

We spent a lecture doing a case study of how Google Chrome uses multiprocessing to foster isolation between tabs even if attackers discover vulnerabilities in the Chrome sandbox. Students reported that this was one of their favorite lectures, as they got to see how our discussions of virtualization and sandboxing play out in practice.

Multithreading safety

I think Rust’s strengths show the most when it comes to multithreading. Rust’s ownership model, which forbids any other reference to a piece of data while a mutable reference to it exists, already helps prevent many classes of multithreading errors. Additionally, Rust’s type system uses “marker traits” called Send and Sync to denote whether types can be moved between threads (Send) and whether they can be accessed concurrently through shared references from multiple threads (Sync). Using this type system, the compiler understands that data protected by a mutex is safe to access concurrently, whereas an ordinary buffer is not, and in safe Rust it will not let you write code that has data races.
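A minimal sketch of how this plays out: a counter shared by several threads has to be wrapped in Arc (shared ownership across threads) and Mutex (exclusive access). Sharing a plain &mut u64 or an Rc with spawned threads is rejected at compile time, because Rc is not Send and a borrowed local does not satisfy the 'static bound on thread::spawn. The parallel_count name and the thread and iteration counts are arbitrary choices for illustration.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Increments a shared counter from `threads` threads, `per_thread` times each.
fn parallel_count(threads: usize, per_thread: u64) -> u64 {
    // Arc gives each thread shared ownership; Mutex allows one writer at a time.
    let counter = Arc::new(Mutex::new(0u64));
    let mut handles = Vec::new();
    for _ in 0..threads {
        let counter = Arc::clone(&counter);
        handles.push(thread::spawn(move || {
            for _ in 0..per_thread {
                // The guard returned by lock() is the only way to reach the data;
                // the lock is released when the guard is dropped.
                *counter.lock().unwrap() += 1;
            }
        }));
    }
    for handle in handles {
        handle.join().unwrap();
    }
    let total = *counter.lock().unwrap();
    total
}

fn main() {
    println!("total = {}", parallel_count(8, 1000));
}
```

Because the data lives inside the Mutex, there is no way to forget to take the lock before touching it, which is a common mistake when a C++ std::mutex sits next to the data it protects.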

Networking and system design

We took a brief look at safety from the perspective of systems design: how do you keep important systems running and prevent attackers from compromising data? We talked about load balancing (with load balancers, DNS, and IP anycast) and fault tolerance, and later had students implement a load balancer. We also had an information security lecture that emphasized the importance of securing the path of least resistance (arguing that databases such as Elasticsearch make it too easy to be insecure) as well as the importance of ensuring dependencies are kept up to date.
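To illustrate load balancing with basic fault tolerance at the smallest scale, here is a hypothetical round-robin selector that skips upstream servers marked as failed. The UpstreamPool type, its methods, and the addresses are illustrative assumptions, not code from the course; a real load balancer would also need to share this state safely across threads and periodically re-check failed servers.

```rust
/// Hypothetical pool of upstream servers with a liveness flag per server.
struct UpstreamPool {
    addrs: Vec<String>,
    alive: Vec<bool>,
    next: usize,
}

impl UpstreamPool {
    fn new(addrs: &[&str]) -> Self {
        UpstreamPool {
            addrs: addrs.iter().map(|a| a.to_string()).collect(),
            alive: vec![true; addrs.len()],
            next: 0,
        }
    }

    /// Records that an upstream failed (e.g. a connection was refused).
    fn mark_dead(&mut self, addr: &str) {
        if let Some(i) = self.addrs.iter().position(|a| a == addr) {
            self.alive[i] = false;
        }
    }

    /// Returns the next live upstream in round-robin order, or None if all are down.
    fn pick(&mut self) -> Option<&str> {
        for _ in 0..self.addrs.len() {
            let i = self.next;
            self.next = (self.next + 1) % self.addrs.len();
            if self.alive[i] {
                return Some(&self.addrs[i]);
            }
        }
        None
    }
}

fn main() {
    let mut pool = UpstreamPool::new(&["10.0.0.1:80", "10.0.0.2:80", "10.0.0.3:80"]);
    pool.mark_dead("10.0.0.2:80");
    for _ in 0..4 {
        println!("{:?}", pool.pick());
    }
}
```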

Nonblocking I/O

We spent a week focused on nonblocking I/O and programming with async/await. This has nothing to do with safety but contributed to the software engineering aspect of the class; in the final project, we asked students to compare and contrast the performance of multiplexing using multithreading with that of using nonblocking I/O. Although it feels like a slight diversion, I think this was a very useful topic to expose students to, as async/await makes the ergonomics of nonblocking I/O easy enough for students to actually use in network-bound applications.

Safety in C++

We briefly looked at how material from the class could be applied to C++ with C++11, 17, and 20 language features. In retrospect, I wish we had spent more time talking about how to write good C++ code and how to use tooling to catch common mistakes. As we explained to students, Rust is a great language and a great way to learn about better coding practices, but as Rust is relatively new and most systems codebases are still in C or C++, it’s important to understand how to work effectively in those languages. Although Rust conceptually maps well to newer C++ language features, the C++ analogs are poorly designed (in my opinion) with many ways to accidentally screw up (e.g. std::optional does nullopt checks when you use .value() to retrieve the inner value, but not when you use the * or -> operators to do that). We rushed through these details, and it would have been better for students to get practice actually using these features.

Additionally, the C++ ecosystem has so many useful tools for catching mistakes or bad habits (since the compiler doesn’t do much by default), and we should have allocated more time to showing students how to use these tools.

Assignments

This class had two kinds of assignments: weekly exercises, which were supposed to be lightweight reinforcement of the material we discussed in lecture each week, and projects, which were more substantial and would give students practice with building more complicated software. We also gave students the option to replace a week’s exercises with a blog post about something they found interesting, and we allowed students to replace a project with a different project more related to their backgrounds or interests (e.g. someone with experience with computer graphics might be more interested in implementing a parallel raytracer in Rust). Sadly, no one took us up on these offers, but we liked having this system in place and it’s something I would like to do again in the future.

We encouraged students to partner up for the two projects. I think this was really valuable for the students that ended up partnering, and the feedback in our surveys was very positive. There is much more design involved in the bigger projects, so it’s helpful for students to be able to discuss and debate ideas, and I think they learned a lot from each other, picking up each other’s workflows and nifty Rust syntax tricks.

You can find all the assignments on the course website.

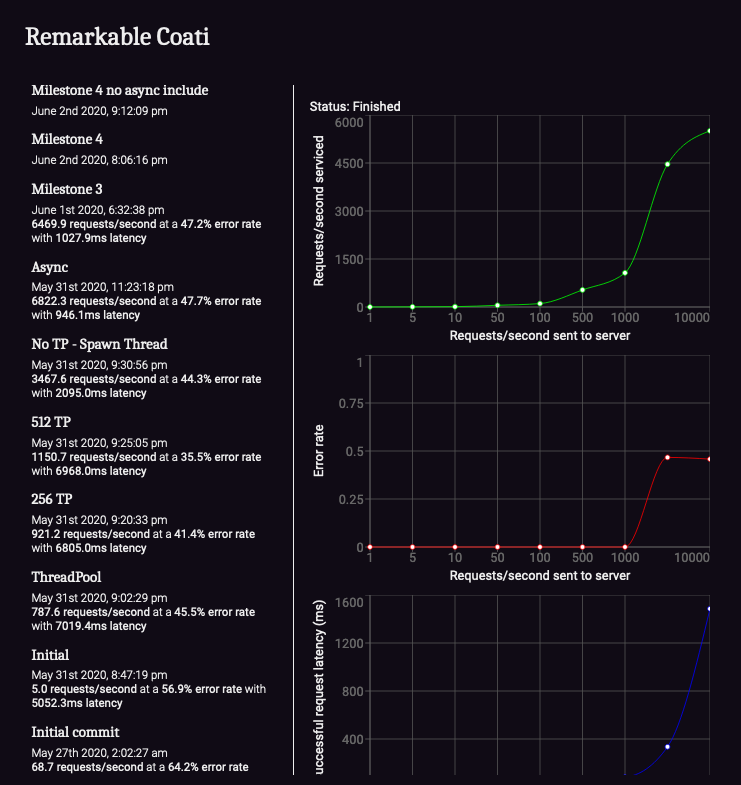

There were too many assignments to succinctly summarize here, but to provide an example, my favorite assignment was the final project, which had students implement an HTTP load balancer called balancebeam. In addition to distributing requests across upstream servers, students’ load balancers were expected to support failover with passive and active health checks as well as basic rate limiting. The primary focus of this assignment was to practice writing safe, multithreaded code in the context of a high-performance network application. As a secondary focus, we wanted students to get practice with evaluating and making software design decisions that impact code simplicity and performance. To this end, we didn’t tell students how to implement any of the requirements (although we did give them a few ideas to start with). Also, we implemented an automated benchmarking tool called balancebench that automatically benchmarks each git commit so that students can see how design decisions affect performance. Is it better to have a thread pool with more or fewer threads? How does nonblocking I/O actually affect performance characteristics? Which synchronization primitives are most helpful for reducing latency? Students were able to see benchmark results throughout their commit history (example here), and a leaderboard also allowed students to compete in a friendly way against each other.
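Since the assignment deliberately left the design of rate limiting up to students, there is no single right answer, but one simple option is a fixed-window counter per client IP. The sketch below is hypothetical: RateLimiter, its fields, and the explicit now parameter (passed in so the logic is easy to test without sleeping) are assumptions for illustration, not balancebeam’s reference solution.

```rust
use std::collections::HashMap;
use std::net::IpAddr;
use std::time::{Duration, Instant};

/// Hypothetical fixed-window limiter: at most `max_requests` per client per `window`.
struct RateLimiter {
    max_requests: u32,
    window: Duration,
    // Per client: when its current window started and how many requests it made in it.
    counts: HashMap<IpAddr, (Instant, u32)>,
}

impl RateLimiter {
    fn new(max_requests: u32, window: Duration) -> Self {
        RateLimiter { max_requests, window, counts: HashMap::new() }
    }

    /// Returns true if the request is allowed, false if the client is over its limit.
    fn allow(&mut self, client: IpAddr, now: Instant) -> bool {
        let entry = self.counts.entry(client).or_insert((now, 0));
        // Start a fresh window once the previous one has expired.
        if now.duration_since(entry.0) >= self.window {
            *entry = (now, 0);
        }
        if entry.1 < self.max_requests {
            entry.1 += 1;
            true
        } else {
            false
        }
    }
}

fn main() {
    let mut limiter = RateLimiter::new(2, Duration::from_secs(60));
    let client: IpAddr = "192.0.2.7".parse().unwrap();
    let t0 = Instant::now();
    for i in 0..3 {
        println!("request {}: allowed = {}", i, limiter.allow(client, t0));
    }
    // After the window elapses, the client may send requests again.
    println!("later: allowed = {}", limiter.allow(client, t0 + Duration::from_secs(61)));
}
```

In a multithreaded proxy, this struct would have to live behind a Mutex (or be sharded), which is exactly the kind of shared-state design decision the benchmarking tool let students measure.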

This assignment worked quite well in utilizing almost all the material from the quarter and challenging students to write safe Rust code to build a high-performance application. I think this assignment was open-ended enough that students were able to take ownership and make their own decisions, but not so open-ended that they felt confused about where to start or how to get anything done. The assignment scope was challenging enough to make students really think about how to manage access to shared data structures, but short enough to not be overwhelming. There are some rough edges to iron out with regard to balancebench (most notably, since it is run on instances that are dynamically spun up in DigitalOcean, network performance between the instances varies throughout the day, so benchmark results are not the most consistent), but we all had a lot of fun with it. I would love to write a more detailed blog post about this assignment if people are interested.

Survey results

Overall, I’m extremely happy with how the class went. Students reported learning a lot from the class, responding 4.8/5 to “how much did you learn” and “how effective was the instruction you received” on the official course survey, and highly recommended it for other students considering the class in the future.

Based on survey results, our primary weakness was trying to pack too much into a small class. A handful of students noted that lectures sometimes went too fast and assignments were sometimes too ambitious for a 2-unit class. These are totally fair complaints.

In addition to the questions on the official course survey (which are too generic to be helpful), we also did our own end-of-quarter surveying. I’ve excerpted a few of the responses I thought were most interesting/insightful. There is some obvious sampling bias here, as students that didn’t enjoy the class are less likely to leave any feedback at all on an optional survey, but we were very diligent about surveying students throughout the quarter and this is the best representation we have.

Throughout the quarter, what material stood out to you the most?

The most prevalent topics that come to mind are data ownership, enums, options/results, and synchronization. My proudest moment in CS110L was completing the DEET debugger. That project had culminated so much of the classes most relevant topics up to that point and marked the point where I felt like I was really starting to get a hold of Rust. As for most fun, I’d say that was the proxy project. By that point, we had come to grips with Rust and were able to implement some pretty cool features while putting together a load balancer.

Wow - learning Rust was amazing. The Google Chrome case study was great - talking about unsafe code examples in waitpid was cool, async programming was wild.. so much comes to mind when thinking about this class

Since I had some background in Rust already, I think it really started to hit when we moved into the second half of the quarter. The discussion of Google Chrome & Mozilla Firefox was a highlight – super interesting and it gave good real-world motivation for why Rust is important. The networking section was also very well taught (nice diagrams!) and interesting. Plus, I loved Sergio’s lecture! Would have been cool to use some of his libraries for a project or assignment.

I loved the talk on broader security ideas. Like the time we spoke about chaos engineering and how Netflix uses that to make their systems more robust. I also enjoyed the very beginning of the class where we spoke about buffer overflows

What were your two or three favorite assignments and why?

As mentioned in a previous response, I really liked both projects. Not only did it feel like I was able to really learn a lot from the projects, but the partner aspect helped a ton to make the work experience more enjoyable. Also, they were both very unique in comparison to any other CS assignment I have worked on at Stanford (aside from the 110 proxy assignment as to be expected). We’ve all spent extensive time using debuggers but now we can all say that we’ve made one too! While super simple, I really appreciated the farm meets multithreading assignment [week 6 exercises] because it went a long way to show how far we’ve come from the start of the quarter when we spent hours implementing hangman (lol).

My favorites were Proj 1, because I never really imagined actually writing a debugger and it was cool to see how they work, and the channels assignment, just because channels are really cool and solved the problem very elegantly.

I loved the channel mapreduce assignment because it wasn’t overwhelming, but it was complex enough to get you to really think about your design. I also loved the two bigger projects because of the cool outcomes of both.

Definitely the two projects! It’s just good to get a bit more in the weeds with some more complex programs. Both of the projects were really well scaffolded and the benchmarking tool for Project 2 was great. They felt more polished than some of the CS 110 assignments which is saying a lot for a first-time course! I also liked the Farm meets multithreading one because I remember doing that CS 110 assignment and it was so much easier using multithreading in Rust haha.

What suggestions do you have on what we should do with the class? Teach it again? Integrate other material? Did the safety theme feel worthwhile and did you feel it worked well, or would it have been better to make this a Rust language class instead of a general safety class?

Teach it again 10000000000%. The safety theme fit perfectly because it had me appreciate Rust. The arc of this quarter for me was: I hate rust -> I sorta see why you’d use rust but I hate rust -> Rust makes this concurrency stuff really easy.. -> I want to continue with Rust and use the lessons I’ve learned to make my C++ better. I wouldn’t have had this arc if this was just a Rust language class - I probably would’ve quit since I wouldn’t have seen the purpose. Since you all said it had better safety features, I wanted to see what that was, and so I decided to stay and get through the hard parts. Framing it with safety is a great way to keep people interested / having them to try new things!

This was a great class and so it would be great if it were taught again. I do think the safety theme had value, but I might have preferred losing some of it for more Rust content.

I think I would have liked to see more content about the general safety and how it related to these topics. Rust was an excellent tool to explore these concepts, but I think I enjoyed the safety portion of it more.

I think the class was great. I’m hesitant to suggest too many changes because I wouldn’t want to take it too far from its niche which (I feel) is introducing relatively new CS students to Rust. I think making it a safety class rather than a Rust class makes total sense – much better for motivation. I absolutely think we also need more higher-level classes on these topics though. For example, I wish CS 140E in Rust was an ongoing thing. I think we should probably also have a class that starts where CS 242 / Sergio’s lecture left off – assuming prerequisites in PL, compilers and systems and going from there deeper into language, library and system design.

Takeaways

As mentioned, this class was an experiment in many ways. Here are some of the questions we had wanted to find answers to:

Is there a place for a safety class in a CS curriculum?

Short answer: I’m still not 100% sure!

Long answer: I think this class worked very well and got students to think more carefully and critically about the code they write. I think some material could be worked into core CS classes instead of being in a separate class (e.g. we could talk about channels in CS 110 and teach students how to use sanitizers to check for potential race conditions), but having a separate class was extremely helpful for experimenting with bigger changes, such as teaching Rust. As core classes improve, there may be less of a need for a safety class, but I think there will always be a place for a class that looks at what is often going wrong in systems and how we can improve practices to build better systems.

What’s it like for students to learn Rust in a short time frame?

As people usually say, Rust has a steep learning curve, and it’s really hard to get productive with it in a short amount of time. This is reflected in the 2019 Rust language survey, and it’s also reflected in the student frustration in the first few weeks of our weekly survey responses. Despite this, even with only a few weeks, it’s possible to get students to a point where they are building software they are proud of.

We did a few things that I think helped with this:

- Distill Rust as much as possible into a few crucial features, and focus only on those features. Rust has such a huge language surface that if we had wanted to teach Rust thoroughly, there wouldn’t have been time for anything else. We explicitly told students “this is not a Rust class!” in order to help set expectations.

- Motivate why Rust is the way it is using simple explanations and examples. Students often get frustrated by the language being difficult, because seemingly simple things that are normal in other languages are sometimes not allowed in Rust. Acknowledging this frustration and explaining why rustc is complaining helps students get on board more quickly. It also helps them recognize potentially problematic patterns when they are programming in other languages.

- Avoid confusing terminology when possible. Rust has a lot of official terminology, sometimes coming from PL theory or functional programming, neither of which our target audience has been exposed to.

Should we try to incorporate Rust into the Stanford core curriculum?

Probably not, not yet.

Stanford has two core systems classes: CS 107 (which introduces C programming) and CS 110 (which teaches multiprocessing, multithreading, and networking in C++). Rust sits weirdly in between the two classes. The ownership model definitely fits better with CS 107 material, but a large part of Rust’s appeal is in its effectiveness in programs that use multithreading and/or networking, and it may be frustrating to spend so much effort fighting the borrow checker without understanding concurrency-based motivations for it.
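Consider a small illustration of the concurrency motivation behind the ownership rules. It is a minimal sketch, not course material, and the function `parallel_count` is hypothetical. Without concurrency, the compiler's refusal to let a spawned thread mutate a local variable looks like pointless pedantry. With concurrency in mind, it is preventing a data race. The accepted version has to make sharing and locking explicit with `Arc` and `Mutex`.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

// Hypothetical helper: `n_threads` threads each increment a shared counter
// `increments` times. The "obvious" version,
//
//     let mut count = 0;
//     thread::spawn(|| count += 1);
//
// is rejected at compile time: the spawned closure borrows `count` mutably,
// and nothing guarantees that `count` outlives the thread or that concurrent
// writes to it are synchronized.
fn parallel_count(n_threads: usize, increments: usize) -> usize {
    // Arc gives shared ownership across threads; Mutex lets only one
    // thread mutate the value at a time.
    let counter = Arc::new(Mutex::new(0usize));
    let handles: Vec<_> = (0..n_threads)
        .map(|_| {
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                for _ in 0..increments {
                    // The lock guard is dropped at the end of this statement.
                    *counter.lock().unwrap() += 1;
                }
            })
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    // Bind the value first so the MutexGuard temporary is dropped before `counter`.
    let total = *counter.lock().unwrap();
    total
}

fn main() {
    println!("{}", parallel_count(4, 1000)); // prints "4000"
}
```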

While I think Rust would be poorly motivated in CS 107, I think it is even more poorly suited for CS 110. CS 110 is not just about how we do things, but also why. Why are things designed the way they are, and if we get certain bugs or performance characteristics, why is that? I don’t think the why comes through enough in Rust. It presents you with a philosophy and says, “follow this philosophy and it will keep you safe.” Consequently, there isn’t room for deviation in thought. You can’t experience why not, because the compiler won’t let you. And although it will try to explain why not via error messages, seeing is believing. Watching a program crash and burn isn’t the same as getting a one- or two-line compiler error that explains how you deviated from the Rust model. Teaching about multiprocessing is also difficult in Rust, as the standard library has no way to call fork, and signal handling support is similarly non-existent. Rust has good abstractions that work in the common case, but a good systems programmer should know how they work under the hood. It’s hard for students to build intuition for what’s happening in the machine if it’s plastered over with abstractions.

I also think it’s hard for students to appreciate Rust without having first experienced C and C++. Two students put it well:

I don’t really agree [that Rust would be a better first language]. Coding in C++ for 110 allowed me to understand the errors that Rust was protecting me from even MORE. If I just trusted Rust, I would have bug-free programs, but I wouldn’t understand what it was protecting me from. For example, in Assign5 for 110 (aggregator), a common error was passing a reference to data that would go out of scope to a thread (an error rust would never allow due to lifetimes). When I ran into that error, things quickly clicked. I remembered that I should be thinking of lifetimes myself in C++, just as Rust had done for me. Seeing both languages together throughout the class just made me understand the errors more deeply, which I think I would’ve lost if 110 was only taught in the safer language.

I don’t think rust is a good teaching language for almost the opposite reason I don’t think python is a good language for teaching – you need to learn programming in a language that can screw you over, that way you learn better habits. Rust is nice once you’ve gotten your feet in the water with a language like C and you’re painfully aware of the damage that it can do. My partner and I were talking about it during the last assignment – the rust compiler almost makes some parts of assignments too easy. Perhaps from a learning standpoint that’s nice – you don’t want students spending hours over stupid memory bugs, but I err on the side of making them cautious vs making them reliant on a strong compiler.

Especially since systems code today is still dominated by C and C++, I think it’s invaluable for students to be able to appreciate the guarantees of Rust while still being aware of what the compiler is doing for you and protecting you from.
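The first student describes a bug from the aggregator assignment: handing a thread a reference to data that goes out of scope. The sketch below is hypothetical, with `count_words` as an illustrative name and not code from the assignment. It shows how Rust handles this. `std::thread::spawn` requires a `'static` closure, so borrowing a local is rejected. `std::thread::scope` (stable since Rust 1.63) accepts the borrow because every thread spawned in the scope is joined before the scope returns.

```rust
use std::thread;

// Rejected by the compiler: `thread::spawn` requires a 'static closure,
// but `article` borrows from `articles`, which might be freed while the
// thread is still running:
//
//     for article in articles.iter() {
//         thread::spawn(|| article.split_whitespace().count());
//     }

/// Hypothetical helper: counts the words in each article on its own thread.
/// Threads may borrow `articles` because `thread::scope` joins them all
/// before returning, so the borrowed data is guaranteed to outlive them.
fn count_words(articles: &[String]) -> Vec<usize> {
    thread::scope(|s| {
        // Collect first so every thread is spawned before any join happens.
        let handles: Vec<_> = articles
            .iter()
            .map(|article| s.spawn(move || article.split_whitespace().count()))
            .collect();
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    })
}

fn main() {
    let articles = vec![
        String::from("rust keeps threads honest"),
        String::from("lifetimes matter in C++ too"),
    ];
    println!("{:?}", count_words(&articles)); // prints "[4, 5]"
}
```

In C++ the equivalent mistake compiles and may only fail intermittently. In Rust the lifetime relationship is spelled out and checked.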

Should this be a standalone class, or should it remain an addon to CS 110?

I’m not sure about this one. I’m thinking about offering this class again, and I’m debating whether to offer it in the same form, or to make it a separate class.

On one hand, registering it as a separate class would allow us to focus on safety topics without needing to follow along with the CS 110 syllabus. This flexibility would be extremely useful for being able to spend more time on C++ safety and tooling, since we would be able to move our discussion of multithreading to sit earlier, leaving more time at the end of the course, without worrying about running ahead of CS 110. Additionally, I think it would be good to dedicate more units to the class, since it took more time than the average two-unit course.

However, I think making it a small, two-unit class made it more accessible to more people, and I think the two classes ended up complementing each other really well (CS 110L made it easier for students to implement and debug CS 110 assignments, and CS 110 gave students better context and appreciation for the problems we discussed in CS 110L).

If you have thoughts on this, let me know.

General teaching lessons learned

We tried out a few things this quarter that I really liked:

- Since this course was taught online due to COVID-19, we tried to find ways to create the community that would ordinarily have developed between people waiting for lecture to start or working on assignments during office hours. We had a course Slack for Q&A, announcements, and random news articles related to course material, and I think this worked very nicely, partially because of our small class size. In that Slack, we created a #reflections channel and prompted students each week to post about things they found interesting and/or were struggling with. This helped students see that they weren’t alone in finding aspects of Rust confusing, and it helped us identify what material students were struggling with. We also hosted Not Office Hours, a casual Zoom call dedicated to anything except course material. I enjoyed getting to know students better through this, and in our surveys, students commented that they enjoyed getting to know each other as well.

- As mentioned, we encouraged students to replace a weekly exercise with a blog post, or a project with an idea of their own. Even though no one ended up doing this, we did talk with a few students about ideas they had, and I think it’s nice for students to have this option available.

- We had weekly surveys and were constantly soliciting feedback. This can create a lot of noise if not executed well, but I think this was helpful for us to improve the class during the quarter, and it was also helpful for getting quality input (instead of polling for feedback at the quarter’s end, when students have already forgotten all the specifics of things they liked/disliked and just want to move on).

I also think I learned a lot about assignment design during this class. It was interesting learning how to balance teaching things in lecture against having students learn them on the assignments. You can’t teach everything in lecture, and it’s important for students to synthesize material for themselves. It’s more rewarding and effective if you can guide them to discover things on their own during an assignment. They’ll remember the journey much better than they remember snippets from lecture. But of course, this makes assignments more difficult and time-consuming, and can lead to bad outcomes if students fall off the path you had in mind. It was also interesting learning how to balance scaffolding in an assignment. Including scaffolding (e.g. skeleton functions and unit tests) makes assignments run more smoothly, but it also reduces the space for student creativity. Throughout this class, we had a mix of assignments. Some students said they appreciated the scaffolded exercises, and pretty much everyone reported spending less time on those assignments, but other students said they liked the open-ended exercises better.

Conclusion

Safety is a big problem in systems programming, and we think it makes sense to have a class teaching students about common pitfalls, how to avoid them, and where modern tools may fall short.

You can find the full course materials on the website here.

Putting this class together was really difficult. We designed the class (including lectures and assignments) from scratch, and there weren’t really any similar classes to borrow existing curriculum from. We needed to adapt to an online format with short notice, and students faced instability throughout the quarter due to COVID-19 and national protests. Additionally, I hadn’t actually ever written any Rust code until three weeks before the class started.

However, this was an incredibly rewarding experience, and I learned so much. We hope to revise and teach the class again.

Please contact me if you have any thoughts or feedback on this class. I am just a lowly grad student with little substantial experience building computer systems, and I think this is a challenging topic to teach, so if you have any comments at all, please email me and I would love to have a discussion!