Assignment 4: Internet Archive

The Internet Archive maintains one of the largest archives of websites, books, movies, and more. Their Wayback Machine allows you to enter a website’s address and see how it looked at various points in the past. (See how Stanford’s website looked in 1996.)

In this assignment, you’ll build a simplified version of the Internet Archive. Given a website to archive, your program will crawl through the network of pages it links to and resources it uses, archiving them to disk. You will build this in several phases. The first version, a sequential version with no multithreading, will take quite some time to finish archiving a website, due to the amount of time each network request takes. You’ll then speed it up using your understanding of multithreading and synchronization, and then you’ll build a thread pool to optimize your thread usage.

Due date: Wednesday, August 1st, 2018 at 11:59pm

What the finished product will do

You’ll be building an archive executable. Given a seed website, this will

begin downloading that website, any websites it links to, any websites those

link to, and so on. Because downloading this network of interconnected websites

could end up downloading a fair portion of the internet (depending on your seed

website), you are restricting your downloads to a whitelist, so we only

download from particular websites of interest.

To download Stanford’s website, we can run the following. (The seed website is

https://www.stanford.edu, and we are restricting to sites on the domain

www.stanford.edu. You could use a wildcard and whitelist *.stanford.edu,

but this ends up indexing a huge number of pages, and it takes a very long time

to download. You can whitelist multiple different domains by using multiple w

flags.)

./archive -w www.stanford.edu -d https://www.stanford.edu

This produces the following output:

[24-07-2018 08:07:00] Beginning download of https://www.stanford.edu

[24-07-2018 08:07:01] End download of https://www.stanford.edu (1.104319 seconds)

[24-07-2018 08:07:01] Skipping download of https://fonts.googleapis.com (not whitelisted, or blocked by robots.txt)

[24-07-2018 08:07:01] Skipping download of https://s.w.org (not whitelisted, or blocked by robots.txt)

[24-07-2018 08:07:01] Beginning download of https://www.stanford.edu/wp-json/

[24-07-2018 08:07:01] Beginning download of https://www.stanford.edu/wp-includes/wlwmanifest.xml

[24-07-2018 08:07:01] Beginning download of https://www.stanford.edu/xmlrpc.php?rsd

[24-07-2018 08:07:01] Beginning download of https://www.stanford.edu/wp-content/plugins/awesome-weather-pro/awesome-weather.css?ver=4.9.7

[24-07-2018 08:07:01] Skipping download of https://fonts.googleapis.com/css?family=Open+Sans%3A400%2C300&ver=4.9.7 (not whitelisted, or blocked by robots.txt)

[24-07-2018 08:07:13] Skipping download of https://www.stanford.edu/wp-content/plugins/awesome-weather-pro/js/js-cookie.js?ver=1.1 (already downloaded)

<many lines omitted...>

[24-07-2018 08:07:13] End download of https://www.stanford.edu/list/admin/#admin-finance (0.323541 seconds)

[24-07-2018 08:07:13] End download of https://www.stanford.edu/list/admin/#admin-research (0.318452 seconds)

[24-07-2018 08:07:13] End download of https://www.stanford.edu/list/admin/#admin-staff (0.314381 seconds)

[24-07-2018 08:07:13] End download of https://www.stanford.edu/list/admin/#admin-students (0.317646 seconds)

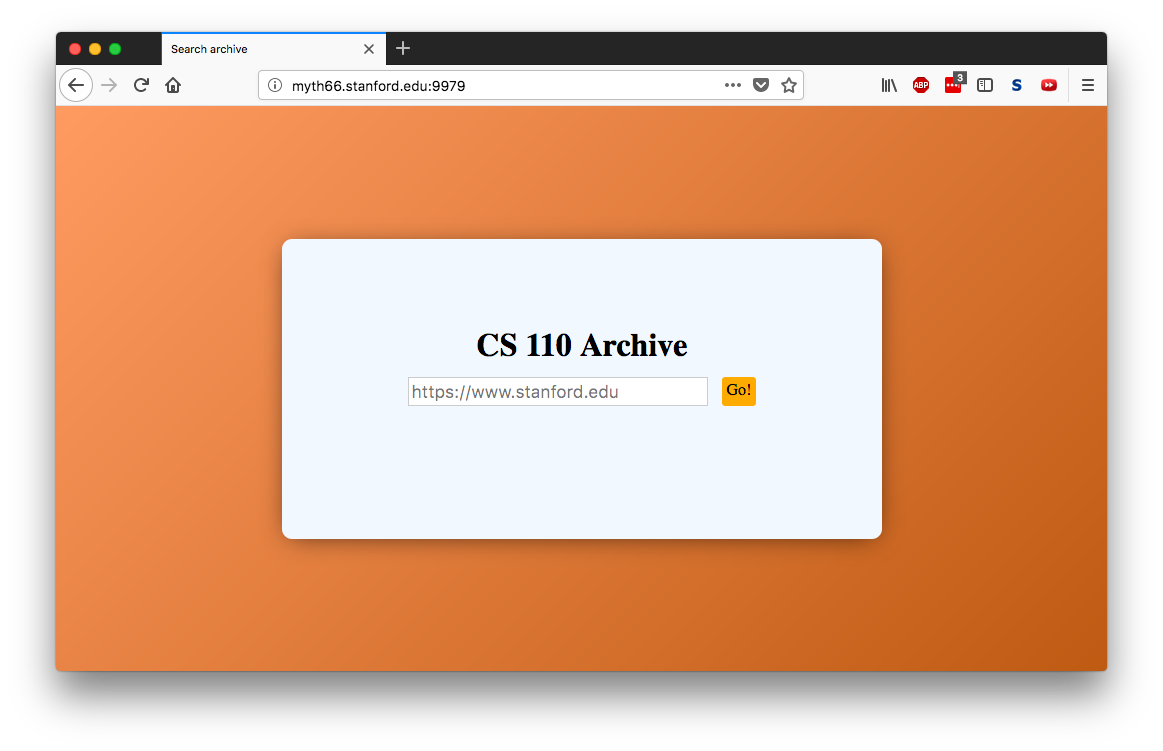

myth66.stanford.edu listening on port 9979...

After crawling a ton of www.stanford.edu pages, this launches a server; the

last line tells you where to connect. (It will be different for you.) If I

connect to http://myth66.stanford.edu:9979 while leaving archive running, I

see the following:

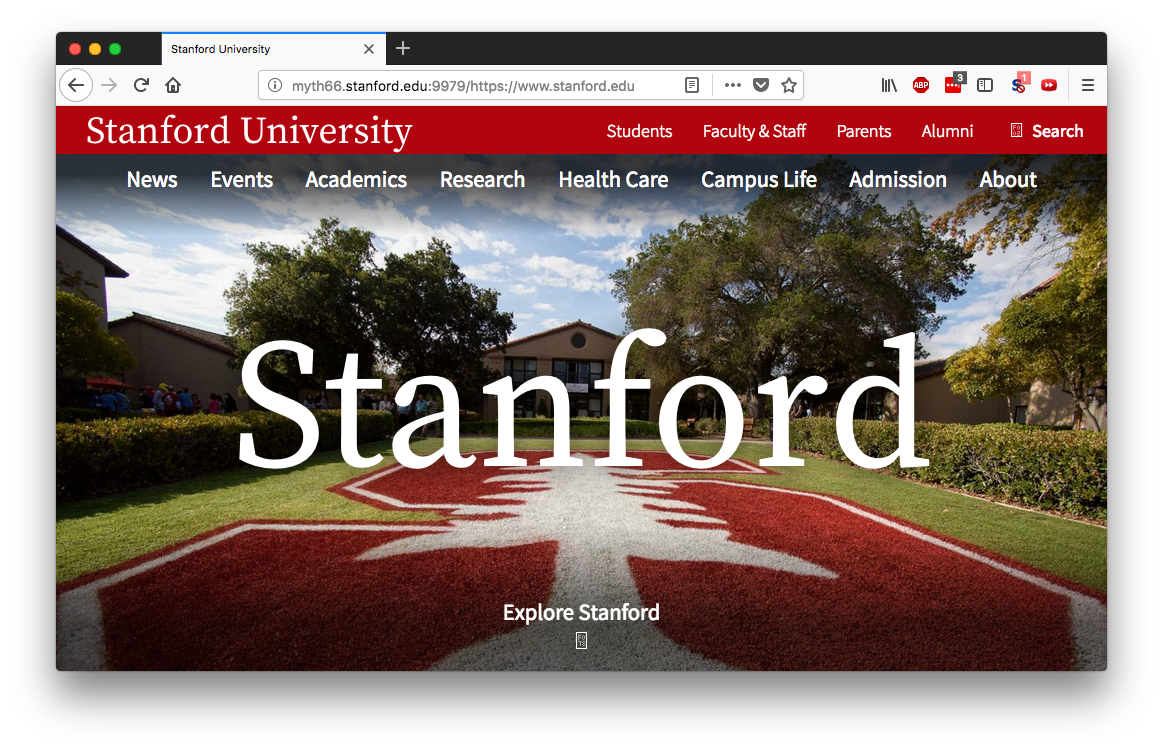

I can enter https://www.stanford.edu, click “Go!”, and see Stanford’s

homepage, in all its glory:

I can click around on the links, and everything works, as long as I only click

links pointing to www.stanford.edu sites (the domain I whitelisted). Even if

Stanford’s website goes offline, this archive can still continue serving it as

if nothing had ever happened.

Program usage

You may want to play around with ./samples/archive_soln before you begin, just to get a

sense of what you’re trying to do here.

The

-dspecifies the seed page that you’d like to begin crawling from. You should specify a full URL, including http/https. For example:./archive -d https://web.stanford.edu/class/cs110/summer-2018/You can only specify one

-dflag, but if you want to index several pages, you can runarchiveseveral times. (The files it downloads are persisted in theindexed-documents/directory.)Add

-wflags to whitelist domains for our crawl. You can specify multiple-wflags, and you can use wildcards if you’d like (though that may include many more pages than you want). For example:./archive -w "*.stanford.edu" -w fonts.googleapis.com -d https://www.stanford.eduWhile you’re building

archive, you may just want to see if it runs correctly, and you may be less interested in browsing the pages it downloads. You can add the-nor--no-serveflag to disable the server. (Your program will exit after downloading the pages.)archivestarts the server with a port number generated from your sunet ID. It’s unlikely anyone else will conflict with your port. However, if you want to run the server on a different port, add the-por--portflag:./archive -p 12345

Important note: archive saves downloaded files in the

indexed-documents/ directory. Running archive several times will add to

this database without clearing it. This allows you to crawl several different

websites and have them all be accessible from your archive web server, but it

might not be what you want in testing.

If you want to start fresh, run make filefree to clear the

indexed-documents directory. You can also run archive with the -m flag to

make it memory-only (it won’t read from disk or persist downloads on disk).

Instructions for off-campus students

As shown above, this assignment features a web server that shows the pages your

program has archived. We’re running this server on ports 2000-65535, but

unfortunately, for security reasons, the myth machines only allow access to

these ports from on-campus computers.

You have several options:

- You can run

archivewith the web server disabled. When you runarchive, add the-nimflag.archivewon’t run the web server, but will spit out a list of downloaded content that you can check for correctness. This is the easiest option of this list, but you miss out on the cool factor of being able to use your archive from your browser. Use an SSH proxy. SSH has a feature that allows us to send traffic to an SSH server, and it will forward that traffic to a web server. If we SSH into a Stanford computer, we can then use that computer to forward web requests to your

archiveserver.- Launch

archive. Let’s say my server is listening onmyth55port9979. - Open an extra terminal window and

ssh -L 9979:myth55.stanford.edu:9979 rice.stanford.edu. Leave this running on the side. (Make sure this is running in a separate terminal window from the one you’re using to connect to myth, and make sure it continues running while you try to usearchivewith your browser.) You should replacemyth55with whatever serverarchiveis running on. - In your browser, go to

http://localhost:9979.

This method might be a little annoying if you have a bad network or frequently sleep your computer (logging into

ricerequires 2 factor authentication), but it is probably the easiest way to be able to use your browser witharchive.By the way, you may be wondering, why not use SSH into

mythand have myth forward the traffic toarchive– why userice? That would definitely be more familiar and straightforward, but unfortunately,mythhas SSH tunneling disabled.- Launch

Connect to the campus network using a VPN; instructions are here. If you feel like taking the time to install the VPN client and get everything set up, then this will probably be the easiest option in the long run. (I haven’t taken the effort to set it up, though, so I can’t really tell you what it’s like.)

Use the terminal-based

lynxbrowser. Open up another terminal window, SSH intomyth, and then run something like this:lynx http://myth66.stanford.edu:9979/https://www.stanford.eduIf you don’t care much for the interactivity of

lynx, you can ask your server for a single page by usingcurl:curl http://myth66.stanford.edu:9979/https://www.stanford.eduThis simply downloads the page from

mythand prints it out on your terminal window. If you get anything back, yourarchiveis probably working correctly. (Errors in the page output probably come from the crawling framework that I’ve written for you.)

A note on robots.txt

If you look at the sample output of archive that I posted above, you’ll

notice that some downloads were skipped. A download from fonts.googleapis.com

was skipped – this makes sense, because that was in our whitelist. A download

from www.stanford.edu was also skipped – this makes less sense.

If you try downloading a website, and you notice that everything is being

skipped even though you’ve whitelisted it, it’s possible this is happening

because of robots.txt. This file is used by website administrators to tell

crawlers (like us) not to download particular parts of the website. You can see

it by going to /robots.txt on any website. For example, I tried using

archive to download Linux man pages, but I ran into issues. When I checked

https://linux.die.net/robots.txt, I saw that everything was disallowed for

our crawler.

Getting started

Clone the repository that we’ve set up for you by typing:

git clone /usr/class/cs110/repos/assign4/$USER assign4

Compile often, test incrementally and almost as often as you compile, run

./tools/sanitycheck a bunch to test your work, and run ./tools/submit when

you’re done. As you’ve seen in past assignments, you can play with a sample

application by invoking ./samples/archive_soln.

A note on this assignment

This is a new assignment, and it was significantly harder to write than I anticipated. I’ve tried very hard to polish it for you, but you will inevitably find bugs. Please report any issues you encounter on Piazza, and please pay extra close attention to Piazza; I will post there about any common issues that come up.

Files

archive.h/cc: This is the main (and possibly only) file that you’ll be

working on for the first part of the assignment. A skeleton is in place for

you, but you need to implement Archive::download to crawl the internet

starting from seed URL.

Feel free to add static functions, or to add private methods to Archive. My

solution adds several.

document.h/cc: This contains the Document class, used to represent web

pages that you encounter. You’ll create a Document for every URL you consider

downloading. Call Document::download to download a web page and parse it for

any links to other web pages, and call Document::getLinks to get those

outgoing links.

You can modify this file if you’d like, but you won’t need to.

index.h/cc: This contains the Index class, used to store all the

Documents you download, and used to implement the archive web server that you

use to access downloaded web pages. You’ll need to call Index::addDocument.

You can modify this file if you’d like, but you won’t need to.

whitelist.h/cc: This class implements access control to the URLs you might

try to access while crawling the web. Every whitelisted URL (specified by -w

command-line arguments) should be added to the whitelist by calling addHost.

Before you download a URL, you should call Whitelist::canAccess to check

whether you’re allowed to access the URL. (This checks both the whitelist from

the -w flags, and it does some much more complicated checking of robots.txt

files to see if we’re allowed to access this specific URL – see “note on

robots.txt” above.)

You can modify this file if you’d like, but you won’t need to.

log.h/cc: This contains a Log class that you can use to produce the

logging messages you see in my sample output. You don’t need to use this class

if you want to use your own logging – we aren’t grading you on your terminal

output format – but it’s easy to use and may save you time, so you should read

the interface in the log.h file.

You can modify this file if you’d like, but you won’t need to.

thread-pool.h/cc: This is where you’ll implement Milestone 3 of the assignment, which is a way to use multiple threads in a more efficient way.

tptest.cc: This is a trivial program that verifies basic ThreadPool functionality. We call this from sanitycheck to see if your ThreadPool is working. Don’t change this file.

tpcustomtest.cc: This contains a collection of functions you can use to test your ThreadPool yourself. This is for you to play with and add as many additional tests as you can think of to make sure your ThreadPool is working brilliantly.

Assignment roadmap

This assignment is broken into four milestones. We are only grading your final version, using multithreading with a ThreadPool, so if you want, you can skip the earlier versions. However, we highly, highly, highly recommend that you don’t skip milestones unless you’re extremely confident in your multithreading abilities. Working in these steps will allow you to get a working product by the end of Milestone 1 and then work incrementally to ensure you don’t break anything.

Milestone 1: Sequential version

Implement Archive::download. Given a URL, create a Document from that URL,

download() the Document, call getLinks to get a list of outbound links

from the document, and then repeat the process with those links. Add each

document to an Index.

You must meet the following requirements:

- You must never download the same URL twice. (It’s fine to create two

Documentobjects with the same URL at some point, but you should never actually calldownload()on aDocumentif you’ve already downloaded a document with the same URL.) - You must call

Whitelist::canAccessbefore callingdownload()on a document, and you shouldn’t download the document ifcanAccessreturns false. - Sometimes downloads can fail. (The network might have issues, the destination

server might be down, or there might be some other odd issue.) If

download()throws aDownloadException, you should just skip downloading that document (move on, and don’t add it to the index). Your program should not crash.

Milestone 2: Multithreaded version, first draft

Update your sequential archive, spawning a thread to download and process

each Document. Take care to avoid any race conditions or deadlock. In

addition, make sure that no more than 16 threads are actively downloading

Documents at any time. We don’t want to overwhelm any destination server

with network connections.

It’s a little tricky to figure out how to wait until all downloads are

finished, since you aren’t sure how many documents you’ll need to download

until you’re completely finished crawling. There are many ways to do it, and

they vary wildly in code complexity and in performance characteristics. (Try to

think of at least two ways.) I don’t want you to spend much time figuring this

out in this milestone, because the way you wait for completion will be

different in Milestone 4, so I will tell you this: in each thread that

downloads a Document with outgoing links (let’s call it thread x), spawn

child threads that each parse a linked URL. In x, declare a local vector of

threads, add the child threads to that vector, and after spawning threads for

all the outgoing links, have x join all the threads.

Note that this isn’t great. This can create very deep thread hierarchies (the main thread might have a child thread with a child thread with a child thread with a child thread… and so on…), and because the parent threads will be waiting around until their child threads exit, we could possibly exceed the maximum number of threads with idle parent threads. We’ll fix things up in Milestone 4, but I want you to first get your hands dirty with synchronization primitives.

Milestone 3: Implementing a Thread Pool

Note: I expect you to spend the majority of your time on this milestone.

Think back to farm from Assignment 2. You created several worker processes,

then distributed work to each worker as it finished its previous work. There

were only eight child processes ever created, but each child factored several

numbers when there was a lot of work to be done.

An easier approach would have been as follows: every time you had a number to

factor, you could fork to launch a worker to factor that number. You could

have implemented it such that a maximum of 8 workers were running at a time (on

an 8-core machine), and some might argue that this would be just as good as

your implementation, since this avoids contention for hardware resources just

like your implementation did. However, this approach is definitely worse,

because even if it has the same number of processes running at a time, it

creates many more processes over the entire execution of the program. Creating

processes is relatively expensive, so if we can reuse processes to do work, we

should do so.

As I noted, your Milestone 2 code has some big issues with the number of

threads it keeps around, but even if we fixed up those issues, it would be like

this latter inefficient farm implementation. Even if you only have 16 worker

threads running at one time, that implementation necessitates creating 1000

worker threads in total to download 1000 URLs. We can do better.

The solution is to launch 16 threads at the start of a program. These threads are part of a thread pool. Work can be added to the pool and one of the threads will wake up to do the work. Concretely, we can add functions to the thread pool’s queue, and one of the threads will wake up, execute the function we added, and then go back to sleep. (Note: specifically, thunks are added to the queue. Thunks are just functions that take no parameters and return no values.)

The thread pool concept is practically inescapable in software engineering; you’ll see it in database libraries, web servers, some graphics rendering engines, and more. The code we’re implementing is quite general-purpose, and the concepts involved will serve you well.

How ThreadPool is used

The ThreadPool class has the following interface:

class ThreadPool {

public:

ThreadPool(size_t numThreads);

void schedule(const std::function<void(void)>& thunk);

void wait();

~ThreadPool();

};

A simple program can use this pool to execute 10 function calls across 4 threads:

static const size_t kNumThreads = 4;

static const size_t kNumFunctions = 10;

int main(int argc, char *argv[]) {

ThreadPool pool(kNumThreads);

for (size_t id = 0; id < kNumFunctions; id++) {

pool.schedule([id] {

cout << oslock << "Thread (ID: " << id << ") has started." << endl << osunlock;

size_t sleepTime = (id % 3) * 10;

sleep_for(sleepTime);

cout << oslock << "Thread (ID: " << id << ") has finished." << endl << osunlock;

});

}

pool.wait();

cout << "All done!" << endl;

return 0;

}

Implementation

Your constructor should do the following:

Launch a single dispatcher thread, which pulls work off the queue, wakes up a particular worker, and hands the function to be executed to that worker. Assume

dtis a privateThreadPoolmember of typethread:dt = thread([this]() { dispatcher(); });Launch a specific number of worker threads (assume

wtsis a privateThreadPoolmember of typevector<thread>):wts[workerID] = thread([this, workerID]() { worker(workerID); });

Your schedule function should accept a thunk (a function that takes no

parameters and returns no value – expressed as type function<void(void)>)

and append it to the end of a queue of such functions. Each time a function is

added, the dispatcher thread should be notified. Once the dispatcher is

notified, schedule should return right away so that more functions can be

scheduled. schedule should be thread-safe (i.e. if your program has more

threads that are running outside of the ThreadPool, it should be possible to

call schedule from multiple different threads and not have any chance of

encountering race conditions).

The dispatcher thread should loop almost interminably. In each iteration, it

should sleep until schedule tells it that something has been added to the

queue. It should then wait for a worker to become available, select the worker,

mark it as unavailable, dequeue a function, put a copy of that function in a

place where the worker can access it, and then signal the worker to execute it.

Reviewing the customer-cashier interactions from the ice cream parlor lecture

example may be helpful.

The worker threads should also loop repeatedly, blocking within each

iteration until the dispatcher thread signals it to execute an assigned

function. Once signaled, the worker should invoke the function, wait for it to

execute, then mark itself as available so that it can be discovered and

selected again by the dispatcher.

The wait function should wait until the ThreadPool is completely idle. It

should be possible to call wait multiple times, and wait should be

thread-safe.

The ThreadPool destructor should wait until all functions have been executed

(it’s fine to call wait), then dispose of any ThreadPool resources. Our

solution doesn’t use any dynamic memory allocation, but if you use it, then be

sure to free resources.

You can test your ThreadPool using tptest.c and tpcustomtest.c (which

compile to tptest and tpcustomtest). If you use dynamic memory allocation,

make sure that you do not leak any memory. (You shouldn’t need to.)

Can I implement ThreadPool without a dispatcher thread?

Yes; it’s quite possible to implement a scheme where workers are notified of

incoming work, and then they pull work off the queue without the dispatcher

specifically handing the work to them. However, we want you to implement

ThreadPool with a dispatcher thread. It’s better practice with thread

communication/synchronization, and the dispatcher thread is essential to

implementing more capable ThreadPools (such as a ThreadPool with lazy

initialization, where the worker threads aren’t created unless they’re actually

needed).

Milestone 4: Multithreaded version, final draft

Update your archive solution to use a ThreadPool of size 16. For each URL

you want to download, you’ll want to schedule a thunk in the pool that

downloads that thread and adds it to the index. Be careful of where you call

ThreadPool::wait; although you called thread::join in almost every thread

in Milestone 2, you will want to be more conservative here so that you’re

efficient with thread usage.

Ensure your final solution still meets the requirements presented in Milestone 1.